Data analysts report spending around 80% of their time collecting, organizing, and cleaning data. This is time that could be better spent on value-added activities.

To address this issue, organizations implement ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) processes. These processes make it easier to turn raw data into meaningful information.

In this article, we will dive into the ELT process, exploring how it works, its benefits, and the challenges it presents. We’ll also compare ELT with ETL and look at common use cases to help you make the most of ELT for your data needs.

What is ELT?

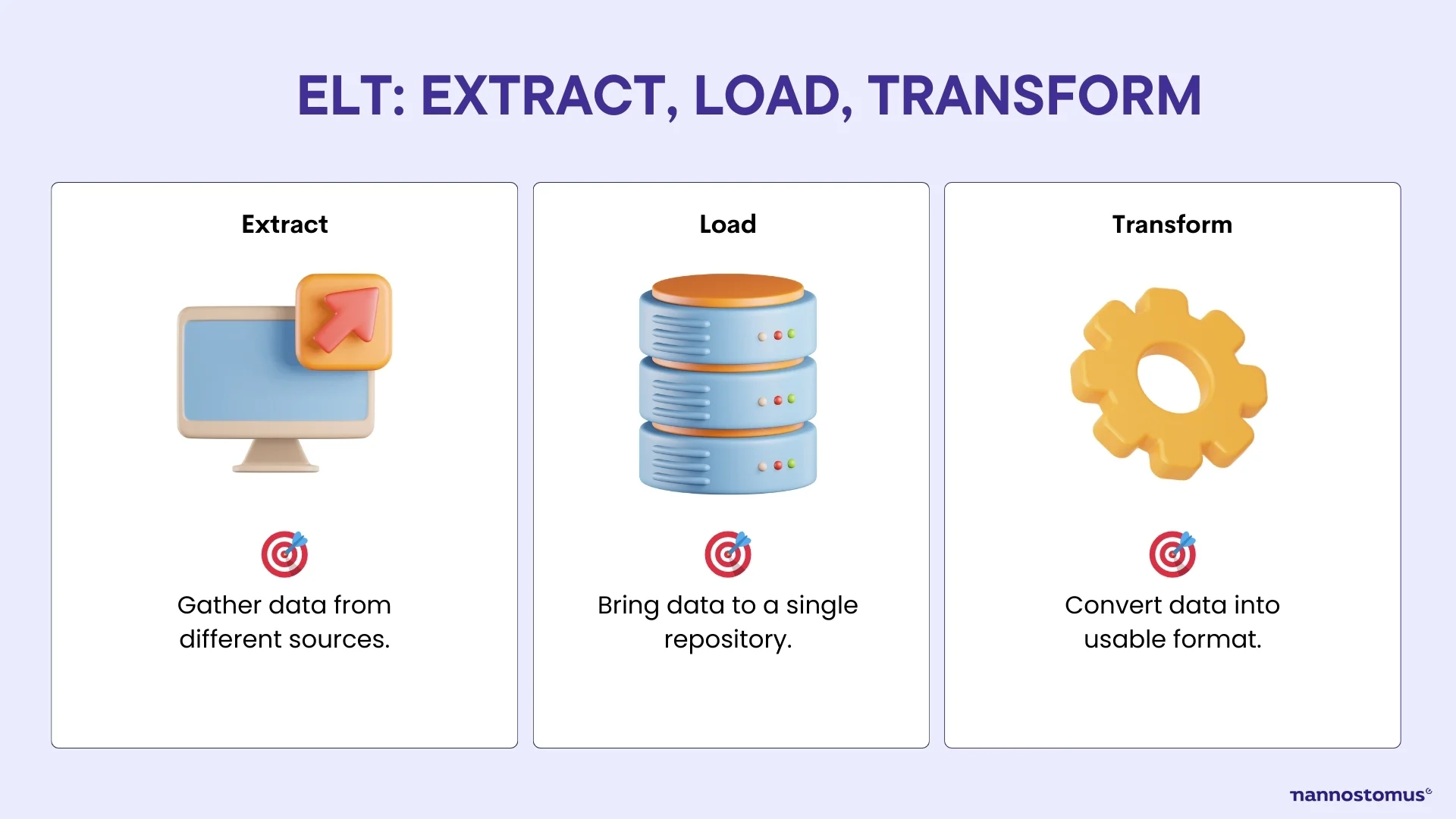

As mentioned above, ELT stands for Extract, Load, Transform. It is a data integration process used in data management and analytics, and it consists of three main steps:

- Extract. Data is collected from various sources—websites, databases, spreadsheets, or cloud storage.

- Load. Data is loaded into a central repository, often a data warehouse or a data lake.

- Transform. Data is converted within this central location to fit the needs of analysis.

The key idea behind ELT is to leverage modern data storage and processing technologies. Because transformations run inside the data repository, on its own compute resources, ELT can handle large volumes of data efficiently. This approach also allows more flexible and complex transformations, since the raw data stays available and can be transformed again as needs change.
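The sketch below illustrates that order of operations in Python. It is a minimal example under stated assumptions: SQLite (through the standard `sqlite3` module) stands in for a cloud data warehouse, and the `raw_orders` and `paid_orders` tables and the sample rows are hypothetical. The raw rows are loaded unchanged, and all cleanup runs afterwards as SQL inside the database.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system; every value arrives as text.
RAW_CSV = """order_id,customer,amount,status
1, alice ,19.99,paid
2,bob,5.00,refunded
3, carol,42.50,paid
"""


def extract(text: str) -> list:
    """Extract: read rows from the source as-is, without cleaning them."""
    return list(csv.DictReader(io.StringIO(text)))


def load(conn: sqlite3.Connection, rows: list) -> None:
    """Load: store the raw rows unchanged in a staging table (all TEXT)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders "
        "(order_id TEXT, customer TEXT, amount TEXT, status TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount, :status)",
        rows,
    )


def transform(conn: sqlite3.Connection) -> None:
    """Transform: clean and type the data with SQL inside the repository."""
    conn.execute("DROP TABLE IF EXISTS paid_orders")
    conn.execute(
        """
        CREATE TABLE paid_orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               TRIM(customer)            AS customer,
               CAST(amount AS REAL)      AS amount
        FROM raw_orders
        WHERE status = 'paid'
        """
    )


conn = sqlite3.connect(":memory:")
load(conn, extract(RAW_CSV))
transform(conn)
print(conn.execute("SELECT * FROM paid_orders ORDER BY order_id").fetchall())
# [(1, 'alice', 19.99), (3, 'carol', 42.5)]
```

Because `raw_orders` keeps the untouched source data, the `transform` step can be changed and rerun later without extracting from the source again.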

How does ELT work?

The ELT process involves three main steps: extraction, loading, and transformation. Here’s how each step works.

1. Extraction

This is the first step, in which data is collected from its sources. The goal is to gather all relevant data and prepare it for loading into a central repository. The extracted data is often raw and unstructured at this stage.

However, this step can be challenging due to several factors.

- Data variety. Data often comes from multiple sources—relational databases, NoSQL databases, APIs, flat files, and websites. Each source has different formats, structures, and access protocols, making it difficult to standardize the extraction process.

- Data quality. The extracted data may contain inconsistencies, missing values, or errors, and poor-quality data leads to inaccurate analyses and insights.

- Data volume. With the increasing amount of data generated by applications and devices, handling large volumes of data during extraction can be demanding. The process needs to be scalable to manage this data.

- Network issues. Extracting data from remote sources can be affected by network latency, bandwidth limitations, or connectivity problems. These issues can slow down the extraction process.

At the end of the extraction step, you have gathered raw data from different places. This data is often unstructured and might contain inconsistencies or errors. Despite these potential issues, the extracted data is now collected and ready for the next step: loading.
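A minimal Python sketch of this step, assuming two hypothetical sources: a CSV export and a newline-delimited JSON dump from an API, both passed in as strings rather than fetched over the network. Each extractor keeps values exactly as received and wraps them with the source name and an extraction timestamp. Quality problems such as the empty email are deliberately left for the transformation step.

```python
import csv
import io
import json
from datetime import datetime, timezone


def extract_csv(text: str, source: str) -> list:
    """Read CSV text; every value stays a string, exactly as exported."""
    return [
        {"source": source, "payload": row}
        for row in csv.DictReader(io.StringIO(text))
    ]


def extract_json_lines(text: str, source: str) -> list:
    """Read newline-delimited JSON (e.g. an API dump); skip blank lines."""
    return [
        {"source": source, "payload": json.loads(line)}
        for line in text.splitlines()
        if line.strip()
    ]


def extract_all(sources: dict) -> list:
    """Run each source's extractor and stamp records with one extraction time."""
    extracted_at = datetime.now(timezone.utc).isoformat()
    records = []
    for name, (extractor, text) in sources.items():
        for record in extractor(text, name):
            record["extracted_at"] = extracted_at
            records.append(record)
    return records


# Hypothetical sources; real extractors would use database drivers or HTTP clients.
sources = {
    "crm_export.csv": (extract_csv, "id,email\n1,a@example.com\n2,\n"),
    "orders_api": (extract_json_lines, '{"id": 10, "total": "19.99"}\n\n{"id": 11}\n'),
}
records = extract_all(sources)
print(len(records))  # 4
```

Tagging each record with its source keeps track of where the data came from once everything lands in one repository.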

2. Loading

Loading is the second stage of the ELT process. The extracted data is moved into a central repository, such as a data warehouse or data lake, so that all collected data is stored in a single location, ready for transformation and analysis. However, this step comes with its own set of challenges.

- Data volume and storage. Managing large volumes of data can strain storage systems, so you need to ensure there is enough capacity to handle the data without performance degradation.

- Data consistency. As data is loaded from different sources, it’s important to verify that all data has been loaded correctly and is synchronized with the source systems.

- Performance. Loading large datasets can be time-consuming and resource-intensive. At Nannostomus, we’ve optimized the loading process with our dedicated web scraping software, bringing the cost down to around $0.0001 per record.

- Error handling. During loading, errors can occur due to network failures, incorrect data formats, or system crashes. That’s why you should implement robust error-handling mechanisms to detect, log, and rectify these errors.

At the end of the loading step, all the extracted data is stored in the central repository. This repository can handle large volumes of raw data and is designed to support complex data processing and analysis tasks. The data remains in its original form, ready for the next step: transformation.
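Here is a minimal sketch of loading with basic error handling and a consistency check, assuming SQLite as a stand-in for the central repository. Each record's payload is stored as JSON text so it stays in its original form. Which errors count as a rejectable record (a missing payload, a non-serializable value, a `NOT NULL` violation) is an illustrative assumption, and retries for network failures are not shown.

```python
import json
import logging
import sqlite3

log = logging.getLogger("loader")


def load_raw(conn: sqlite3.Connection, records: list) -> tuple:
    """Load records as raw JSON text; log and set aside any that fail."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_events "
        "(source TEXT NOT NULL, payload TEXT NOT NULL)"
    )
    before = conn.execute("SELECT COUNT(*) FROM raw_events").fetchone()[0]
    loaded, rejected = 0, []
    with conn:  # commit on success; roll back if an unexpected error escapes
        for record in records:
            try:
                conn.execute(
                    "INSERT INTO raw_events (source, payload) VALUES (?, ?)",
                    (record.get("source"), json.dumps(record["payload"])),
                )
                loaded += 1
            except (KeyError, TypeError, sqlite3.IntegrityError) as exc:
                # Bad record: log it and continue with the rest of the batch.
                log.warning("rejected record %r: %s", record, exc)
                rejected.append(record)
    # Consistency check: the table must have grown by exactly `loaded` rows.
    after = conn.execute("SELECT COUNT(*) FROM raw_events").fetchone()[0]
    if after - before != loaded:
        raise RuntimeError(f"expected {loaded} new rows, found {after - before}")
    return loaded, rejected


conn = sqlite3.connect(":memory:")
batch = [
    {"source": "orders_api", "payload": {"id": 10}},
    {"source": None, "payload": {"id": 11}},      # violates NOT NULL on source
    {"source": "orders_api", "payload": {1, 2}},  # a set is not JSON-serializable
]
loaded, rejected = load_raw(conn, batch)
print(loaded, len(rejected))  # 1 2
```

Rejected records are returned rather than dropped silently, so they can be inspected, fixed, and reloaded.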

3. Transformation

This is the final stage of the ELT process. Here, raw data is converted into a structured and usable format. This step involves cleaning, organizing, and modifying the data to meet the requirements of analysis and reporting. However, transforming data can present several challenges.

- Complexity. Transforming data often requires complex operations: joining tables, filtering records, aggregating data, and applying business rules.

- Scalability. As data volumes grow, transformation processes must scale accordingly. Ensuring that the system can handle increasing amounts of data without performance degradation is a key challenge.

By the end of this step, the raw data is cleaned, structured, and ready for analysis. The transformed data is now consistent, high-quality, and tailored to meet the specific needs of the organization. It is stored in the central repository, where it can be accessed by data analysts, business intelligence tools, and other applications.
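As an illustration, the following sketch runs a typical transformation entirely in SQL inside the repository. It removes a duplicated order, applies a business rule (refunds are excluded), joins orders to customers, normalizes inconsistent country codes, and aggregates revenue. SQLite again stands in for the warehouse, and the tables and values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical raw tables, loaded unchanged: order 2 arrived twice, and
# customer 3's country code is lowercase.
conn.executescript(
    """
    CREATE TABLE raw_customers (customer_id INTEGER, country TEXT);
    CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER,
                             amount REAL, status TEXT);
    INSERT INTO raw_customers VALUES (1, 'DE'), (2, 'US'), (3, 'us');
    INSERT INTO raw_orders VALUES
        (1, 1, 100.0, 'paid'),
        (2, 2, 40.0, 'paid'),
        (2, 2, 40.0, 'paid'),
        (3, 3, 60.0, 'paid'),
        (4, 1, 25.0, 'refunded');
    """
)

# The transformation: deduplicate, filter, join, normalize, and aggregate.
conn.execute(
    """
    CREATE TABLE revenue_by_country AS
    WITH unique_orders AS (
        SELECT DISTINCT order_id, customer_id, amount, status FROM raw_orders
    )
    SELECT UPPER(c.country) AS country,
           COUNT(*)         AS paid_orders,
           SUM(o.amount)    AS revenue
    FROM unique_orders AS o
    JOIN raw_customers AS c ON c.customer_id = o.customer_id
    WHERE o.status = 'paid'  -- business rule: refunds are excluded
    GROUP BY UPPER(c.country)
    """
)
print(conn.execute("SELECT * FROM revenue_by_country ORDER BY country").fetchall())
# [('DE', 1, 100.0), ('US', 2, 100.0)]
```

The raw tables are left untouched, so the same query can be revised (for example, to count refunds separately) and rerun over the original data.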

What is the difference between ETL and ELT?

ETL and ELT are both used for managing data, but they differ in the order of operations and the technologies they leverage. Here’s a closer look at the main differences between ETL and ELT.

| | ETL | ELT |
|---|---|---|
| Operation order | Data is first extracted, then transformed into a suitable format, and finally loaded into a data warehouse or database. The transformation occurs before the data reaches the central repository. | Data is first extracted and loaded into the central repository in its raw form. The transformation takes place within the data warehouse or data lake, which allows more complex and flexible transformations using the storage system’s processing power. |
| Technology and tools | Dedicated ETL tools perform the transformation before the data is loaded. These tools often run on separate servers, which can limit the scalability and speed of the transformations. | ELT relies on modern data storage and processing technologies, such as cloud-based data warehouses and data lakes. These systems handle large volumes of data and perform complex transformations within the storage system itself. |
| Performance and scalability | Because transformations occur before loading, the ETL process can be slower and less scalable, especially with large datasets. Performance is often limited by the capabilities of the ETL tools and the hardware they run on. | By performing transformations within the data warehouse or data lake, ELT takes advantage of the processing capabilities of these systems. This makes ELT more scalable and better suited to large and complex datasets. |
| Flexibility | ETL requires predefined schemas and structures for the transformation step. This limits flexibility, as any change to the data structure requires modifying the ETL process. | Raw data is kept in the central repository, and transformations can be applied as needed. This allows more dynamic and iterative data processing, making it easier to adapt to changing business requirements. |
| Use cases | Often used in traditional data warehousing environments where data volumes are moderate and predefined transformations are sufficient. | Better suited to modern, cloud-based data environments where large volumes of data need to be processed quickly and flexibly. It works well for big data analytics and real-time data processing. |

So, while both ETL and ELT aim to turn raw data into meaningful insights, they differ in their approach, technology, and suitability for various data processing needs.
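To make the operation-order and flexibility rows of the table concrete, here is a small Python sketch that computes the same result both ways. It assumes SQLite as the target database, and the tables and the currency-totals requirement are hypothetical. The ETL path loads only the computed result, while the ELT path loads the raw rows and expresses the transformation as a view.

```python
import sqlite3

# Hypothetical source rows: (order_id, amount, currency)
SOURCE = [(1, 10.0, "EUR"), (2, 20.0, "USD"), (3, 5.0, "EUR")]


def run_etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in application code first, then load only the result."""
    eur_total = sum(amount for _, amount, currency in SOURCE if currency == "EUR")
    conn.execute("CREATE TABLE etl_eur_total (total REAL)")
    conn.execute("INSERT INTO etl_eur_total VALUES (?)", (eur_total,))


def run_elt(conn: sqlite3.Connection) -> None:
    """ELT: load everything raw, then transform with SQL in the database."""
    conn.execute("CREATE TABLE raw_orders (order_id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", SOURCE)
    conn.execute(
        "CREATE VIEW elt_eur_total AS "
        "SELECT SUM(amount) AS total FROM raw_orders WHERE currency = 'EUR'"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)

# A new requirement arrives: totals for every currency. With ELT, the raw rows
# are already in the database, so a new query is enough.
print(conn.execute(
    "SELECT currency, SUM(amount) FROM raw_orders GROUP BY currency ORDER BY currency"
).fetchall())  # [('EUR', 15.0), ('USD', 20.0)]
# With ETL, etl_eur_total holds a single number and the USD rows were never
# stored, so the pipeline itself has to change and the data must be re-extracted.
```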

ELT use cases

ELT is particularly suited for modern data environments that require flexibility, scalability, and the ability to handle large volumes of data. Here are three common use cases where ELT shines.

Big data analytics

A large e-commerce company wants to analyze customer behavior to improve product recommendations and marketing strategies.

Process

The company collects data from website logs, transaction records, review platforms, and emails. This data is extracted and loaded into a data lake in its raw form. Once in the data lake, the data is transformed using the lake’s processing engine to clean, organize, and merge it with other relevant datasets.

Benefits

ELT allows the company to handle the large volume and variety of data. By transforming data within the data lake, the company can perform complex analyses and generate insights quickly.
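A simplified sketch of such a transformation, assuming SQLite as a stand-in for the data lake’s query engine. The `raw_page_views` and `raw_transactions` tables and their contents are hypothetical. The query merges them into per-customer, per-product features (how often a product was viewed versus bought) that a recommendation model could consume.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical raw data as it might land in the lake, before any cleanup.
conn.executescript(
    """
    CREATE TABLE raw_page_views (customer_id TEXT, product_id TEXT);
    CREATE TABLE raw_transactions (customer_id TEXT, product_id TEXT, amount REAL);
    INSERT INTO raw_page_views VALUES
        ('c1', 'p1'), ('c1', 'p2'), ('c1', 'p2'), ('c2', 'p1');
    INSERT INTO raw_transactions VALUES ('c1', 'p2', 30.0);
    """
)

# Transform inside the store: merge clickstream and sales per customer/product.
# Only viewed products are kept (LEFT JOIN from views), a simplifying assumption.
conn.execute(
    """
    CREATE TABLE customer_product_features AS
    WITH view_counts AS (
        SELECT customer_id, product_id, COUNT(*) AS views
        FROM raw_page_views
        GROUP BY customer_id, product_id
    ),
    purchase_counts AS (
        SELECT customer_id, product_id, COUNT(*) AS purchases
        FROM raw_transactions
        GROUP BY customer_id, product_id
    )
    SELECT v.customer_id, v.product_id, v.views,
           COALESCE(p.purchases, 0) AS purchases
    FROM view_counts AS v
    LEFT JOIN purchase_counts AS p
      ON p.customer_id = v.customer_id AND p.product_id = v.product_id
    """
)
rows = conn.execute(
    "SELECT * FROM customer_product_features ORDER BY customer_id, product_id"
).fetchall()
print(rows)  # [('c1', 'p1', 1, 0), ('c1', 'p2', 2, 1), ('c2', 'p1', 1, 0)]
```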

Real-time data processing

A financial company needs to monitor and analyze real-time trading data to detect fraudulent activities and make quick trading decisions.

Process

The firm extracts streaming data from trading platforms and financial markets and loads it directly into a cloud-based data warehouse. The data warehouse processes the data in real time, applying the necessary transformations to detect patterns and anomalies.

Benefits

With ELT, the company can leverage the high processing power of the data warehouse to perform real-time analytics. This enables the firm to detect and respond to fraudulent activities promptly and make informed trading decisions.
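The sketch below approximates this pattern with micro-batches, assuming SQLite as a stand-in for the cloud warehouse and plain lists of tuples in place of a real trading stream. The fraud rule (flag a trade larger than `factor` times the account’s average of earlier trades) is a hypothetical, deliberately simple placeholder for real detection logic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_trades (ts INTEGER, account TEXT, amount REAL)")


def load_micro_batch(trades: list) -> None:
    """Append one micro-batch of raw trades as it arrives from the stream."""
    with conn:
        conn.executemany("INSERT INTO raw_trades VALUES (?, ?, ?)", trades)


def flag_anomalies(since_ts: int, factor: float = 5.0) -> list:
    """Transform in the warehouse: flag new trades far above the account's history."""
    return conn.execute(
        """
        SELECT t.ts, t.account, t.amount
        FROM raw_trades AS t
        WHERE t.ts >= ?
          AND t.amount > ? * (SELECT AVG(p.amount) FROM raw_trades AS p
                              WHERE p.account = t.account AND p.ts < t.ts)
        ORDER BY t.ts
        """,
        (since_ts, factor),
    ).fetchall()


# Two hypothetical micro-batches; account A suddenly trades 50x its usual size.
load_micro_batch([(1, "A", 100.0), (2, "A", 120.0), (3, "B", 80.0)])
print(flag_anomalies(since_ts=1))  # []
load_micro_batch([(4, "A", 5500.0), (5, "B", 90.0)])
print(flag_anomalies(since_ts=4))  # [(4, 'A', 5500.0)]
```

A trade with no earlier history for its account is never flagged, because the average is `NULL` and the comparison is not true.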

IoT data management

A smart city initiative aims to use data from various IoT sensors to optimize traffic flow and reduce energy consumption.

Process

Data from thousands of IoT sensors—traffic cameras, air quality monitors, and energy meters—is extracted and loaded into a centralized data lake. The raw data is then transformed within the data lake to create structured datasets that can be used for analysis and visualization.

Benefits

ELT allows the smart city project to handle the high volume and variety of IoT data. Its flexibility also lets the project adapt to new data sources and changing requirements, providing valuable insights to improve urban planning and resource management.
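One way to get that flexibility is sketched below, assuming a narrow "one row per measurement" raw table in SQLite (standing in for the data lake) and hypothetical sensor IDs and metrics. Because new sensor types fit the same layout, an energy meter’s readings can be loaded as-is and picked up by the existing hourly transformation without a schema change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical raw store: one row per measurement, whatever the sensor type.
conn.execute(
    "CREATE TABLE raw_readings (sensor_id TEXT, ts INTEGER, metric TEXT, value REAL)"
)
conn.executemany(
    "INSERT INTO raw_readings VALUES (?, ?, ?, ?)",
    [
        ("cam-1", 0, "vehicles", 12),
        ("cam-1", 1800, "vehicles", 18),
        ("air-7", 600, "pm25", 9.5),
        ("air-7", 4200, "pm25", 14.0),
    ],
)


def hourly_summary() -> list:
    """Transform inside the store: average each metric per sensor per hour."""
    return conn.execute(
        """
        SELECT sensor_id, metric, ts / 3600 AS hour, AVG(value) AS avg_value
        FROM raw_readings
        GROUP BY sensor_id, metric, hour
        ORDER BY sensor_id, metric, hour
        """
    ).fetchall()


print(hourly_summary())

# A new data source (energy meters) arrives: load it as-is and rerun the transform.
conn.execute("INSERT INTO raw_readings VALUES ('meter-3', 100, 'kwh', 2.5)")
print(hourly_summary())
```

The narrow layout is a design choice for this sketch; it trades some query convenience for the ability to accept new metrics without altering tables.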

Conclusion

The ELT process (Extract, Load, Transform) offers an efficient way to handle large volumes of data from a wide range of sources. By loading data into a central repository before transforming it, ELT is more flexible and scalable than the traditional ETL process.

For those looking to optimize their data processes, partnering with experts can make a difference. At Nannostomus, we specialize in providing tailored ELT solutions to meet your unique data needs. Let us help you transform your raw data into valuable insights that drive informed decision-making and business success.