The Nannostomus web scraping tool is a software package for companies and freelance developers. It’s a ready-to-go solution that makes collecting data easy and cost-effective. Suitable for businesses large and small, Nannostomus lets you tap into web data without hiring senior staff or investing in expensive tech.

But what exactly is the Nannostomus web scraper, and how can you use it effectively? Keep reading to find out.

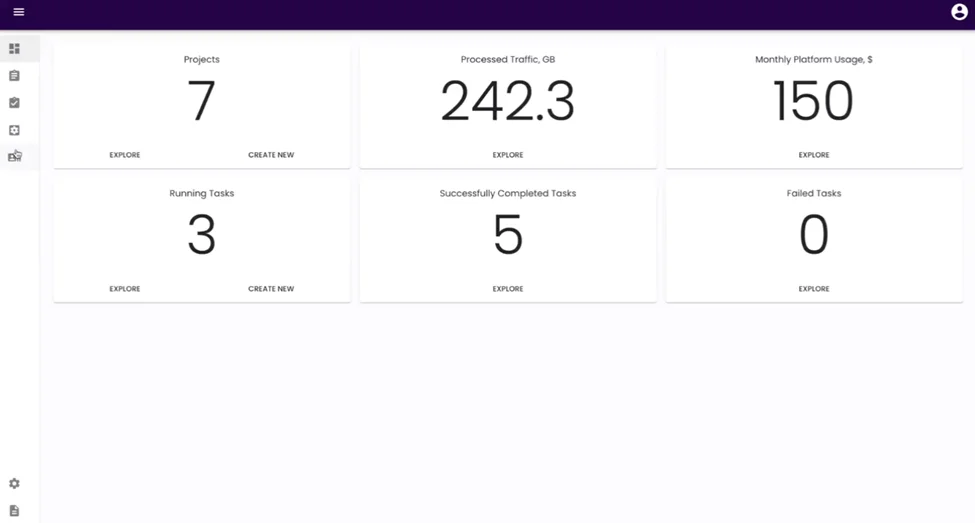

What’s inside the Nannostomus console?

Inside Nannostomus, there are five modules:

- Dashboard. Offers users a view of critical metrics: number of active projects, processed traffic, monthly usage, and task statuses.

- Projects. Gives detailed information about all ongoing and completed projects, their names, codes, statuses, and more. Here, you can also create, update, or delete projects.

- Tasks. Allows users to manage tasks: start, stop, and track all tasks in real time.

- Modules. Here, developers can integrate new modules into the system. It also has information about each module, namely its functionality and usage.

- Users. Provides administrative capabilities for creating and managing users. There is also access control, where admins can enable or disable users.

Depending on your user group, you may have limited access to some of these modules. For example, an Admin can use all five modules and can add, enable, and disable users. A Developer can create or add modules. A Customer can create, view, and edit projects, as well as create and run tasks, but won’t see the Users or Modules sections.

The best web scraping tool for efficient budget-friendly data collection

Nannostomus enables you to create scrapers for automatically collecting information from websites.

These scrapers visit web pages, just like a person would, and then grab the pieces of data that you need. This can be anything from product prices and contact information to text and images. Essentially, web scrapers help you gather large amounts of data quickly, without having to copy and paste information manually.

But some websites are complex, with dynamic content, IP blocking, or other mechanisms for stopping automated data extraction, so our solution includes further essential tools for web scraping. Nannostomus equips you with everything you need to automate and accelerate your data gathering processes. Let’s look at the core features of our software.

C# library

The main objective of bringing our web screen scraping tools to the market is to make automated data collection accessible to everyone.

Nannostomus is an easy-to-use web scraping tool that comes with a C# code library for building scrapers. It includes code snippets that allow even a junior-level developer to create efficient bots for extracting data from a wide range of sources. As a result, you don’t have to hire high-level software engineers for complex scraping projects.

C# is the flagship language for .NET, the framework we use for development. We’ve chosen this stack for a few reasons:

- We reuse time-tested code snippets written by our developers for .NET. This cuts scraper development time and, in turn, the associated costs for our clients.

- High-speed execution for timely data extraction.

- A framework with an automated error-handling system for consistent data scraping.

- Suitable for scraping data from diverse sources.

- Advanced security features to protect both our scraping tools and the websites we interact with.

- Integrated with AWS (the cloud service we use) for scalable and reliable scraping.

With the code templates available in our library, anyone with a basic understanding of C# can use our automated web scraping tools for efficient data collection.

Resource management

Resource management is a core feature of Nannostomus. AWS (the cloud provider we use) charges based on the computing resources you consume, so keeping each virtual machine close to full capacity means you get the most out of your investment. To that end, the console balances the load across the virtual machines that run your scrapers.

Here is how it works:

- Each virtual machine is assessed for its processing power and current load.

- Tasks are scheduled based on priority, task type, and resource usage.

- To prevent overloading the websites and to comply with rate limits, tasks are timed so that requests are spread out evenly.

- The system continually monitors the load on each machine and dynamically redistributes tasks as needed. For example, if one VM finishes its queue early or another VM is running slower due to complex pages, the system will reallocate tasks in real time.

- If the system detects that the load consistently exceeds the current capacity, it will automatically request more VMs from AWS to handle the increased demand. Conversely, during periods of low demand, the system will reduce the number of active VMs.

- There are detailed reports on resources, types of work, and costs.
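To make the balancing idea more concrete, here is a minimal sketch in Python. It is not the Nannostomus scheduler: the function names `assign_tasks` and `spaced_offsets`, the capacity numbers, and the greedy "lowest relative load first" rule are all assumptions chosen for illustration. It only shows how tasks might be spread across VMs of different sizes and how requests might be spaced evenly inside a time window.

```python
import heapq


def assign_tasks(vm_capacity, task_costs):
    """Greedy balancing (illustrative assumption, not the real scheduler).

    Each task, largest first, goes to the VM whose load relative to its
    capacity is currently the lowest.
    """
    # Heap entries: (relative_load, vm_name, absolute_load)
    heap = [(0.0, name, 0.0) for name in sorted(vm_capacity)]
    heapq.heapify(heap)
    plan = {name: [] for name in vm_capacity}

    for task, cost in sorted(task_costs.items(), key=lambda kv: -kv[1]):
        _, name, load = heapq.heappop(heap)
        plan[name].append(task)
        load += cost
        heapq.heappush(heap, (load / vm_capacity[name], name, load))
    return plan


def spaced_offsets(n_requests, window_seconds):
    """Start times (in seconds) that spread requests evenly over a window."""
    step = window_seconds / n_requests
    return [i * step for i in range(n_requests)]


if __name__ == "__main__":
    # Hypothetical VMs: vm-a has twice the capacity of vm-b.
    vms = {"vm-a": 2.0, "vm-b": 1.0}
    tasks = {"t1": 4, "t2": 2, "t3": 2, "t4": 1}
    print(assign_tasks(vms, tasks))
    print(spaced_offsets(4, 60))
```

A production scheduler would also weigh priority and task type and would re-run the assignment as load changes; the sketch leaves those out to keep the core idea visible.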

As a result, running web scrapers turns out to be highly efficient and cost-effective, which lets us deliver a budget-friendly service to our clients. For example, when we collect sex offender data, 1 million records (with images) cost us only $100. That works out to about $0.0001 per record.
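The per-record figure is simple division, and the same arithmetic can give a rough budget for other volumes. The short Python sketch below assumes cost scales linearly with the number of records, which is our simplifying assumption and not a pricing guarantee; the function names and the 250,000-record example are hypothetical.

```python
def cost_per_record(total_cost_usd, records):
    """Average cost of one record, in US dollars."""
    return total_cost_usd / records


def estimate_cost(records, unit_cost_usd):
    """Rough budget, assuming cost grows linearly with record count."""
    return records * unit_cost_usd


# Figures from the example above: $100 for 1 million records.
unit = cost_per_record(100, 1_000_000)
print(f"${unit:.4f} per record")

# Hypothetical project size, for illustration only.
print(f"${estimate_cost(250_000, unit):.2f} for 250,000 records")
```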

Batch website processing

Clients frequently approach us with requests for bulk website scraping. That is, they want to get as much information as possible from multiple websites.

To ensure cost-effective data collection at scale, we’ve integrated the batch website processing feature into our web scraping online tool. This capability allows users to manage and extract data from multiple websites simultaneously, which is particularly valuable for comprehensive data collection tasks across a vast array of sources.

For instance, suppose your project involves gathering detailed information from 100 e-commerce websites: prices, product descriptions, images, customer reviews, and technical specifications. Using our automated web scraping tool, you can collect all of that data in one streamlined run.
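As a rough illustration of what processing many sites at once can look like, here is a small Python sketch built on the standard library’s thread pool. It is not the Nannostomus batch engine: `scrape_site` is a hypothetical stand-in that returns a fake record without touching the network, and the `.example` URLs are placeholders.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_site(url):
    """Hypothetical stand-in for a real scraper; no network access."""
    return {"url": url, "status": "ok"}


def scrape_batch(urls, scraper=scrape_site, max_workers=8):
    """Run one scraper call per URL in parallel.

    Returns (results, errors). A failure on one site is recorded in
    `errors` and does not stop the rest of the batch.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(scraper, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # keep going if a single site fails
                errors[url] = str(exc)
    return results, errors


if __name__ == "__main__":
    urls = [f"https://shop{i}.example" for i in range(100)]
    results, errors = scrape_batch(urls)
    print(len(results), "sites scraped,", len(errors), "failed")
```

Collecting failures separately is a design choice worth keeping in any real batch job: one blocked or broken site should not cost you the other ninety-nine.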

Services

The Nannostomus web data scraper tool is equipped with robust services for tackling complex websites. These proprietary solutions are crucial for handling sophisticated web scraping projects that involve navigating challenging website security measures.

Our tool uses a network of proxies that allows your scrapers to access websites from various IP addresses. This is particularly useful when dealing with websites that have strict access controls or when collecting geo-restricted data. By rotating through different proxies, Nannostomus masks the scraper’s activities, which reduces the likelihood of being blocked and helps keep data collection uninterrupted.
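The rotation idea itself is simple, and a minimal Python sketch may help. This is not the Nannostomus proxy service: the `ProxyRotator` class is hypothetical, plain round-robin is just one possible rotation policy, and the proxy addresses come from an IP range reserved for documentation.

```python
import itertools


class ProxyRotator:
    """Hands out proxies in round-robin order (illustrative only)."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(list(proxies))

    def next_proxy(self):
        """Return the next proxy, wrapping around at the end of the list."""
        return next(self._cycle)


if __name__ == "__main__":
    # Placeholder addresses from the 203.0.113.0/24 documentation range.
    rotator = ProxyRotator([
        "http://203.0.113.10:8080",
        "http://203.0.113.11:8080",
        "http://203.0.113.12:8080",
    ])
    for page in range(5):
        print(f"page {page} -> {rotator.next_proxy()}")
```

In a real scraper, each outgoing request would be sent through the proxy returned by `next_proxy()`. More advanced policies might skip proxies that were recently blocked or pick one by region.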

Captchas are a common method websites use to verify that a user is human. Our captcha-solving service integrates with our scraping tools to recognize and solve captchas. This enables our scrapers to keep running without manual intervention.

Project management

Nannostomus includes a project management suite that streamlines the monitoring and administration of your scraping projects. This suite encompasses statistics, billing, and logging.

The statistics module captures detailed records of all scraping activities: the sources visited, the tasks performed, and the time taken for each task. It allows you to analyze workflows and identify areas for improvement.

Keep track of costs with the billing feature. This module records the cost associated with each activity, so you get a clear breakdown of expenditure and can adjust your strategy according to the financial insights gained.

The logging module integrates with Amazon OpenSearch to collect and store detailed information about all scraping activities. This lets you monitor system performance. When issues occur, you can analyze which actions led to the problem and then adjust your flow for future projects.

The microservices system of our web scraping tool kit

The Nannostomus web scraping automation tool is made of independent services, which we covered above. The adoption of a microservices architecture brings significant benefits, particularly when managing complex, scalable web scraping operations.

You can scale each component in a microservices architecture independently. What this means for you is more efficient resource use and improved handling of increased loads. For example, if the demand for captcha-solving increases, you’ll scale up only the captcha-solving service without affecting other components. So, Nannostomus offers top web scraping tools for handling variable amounts of data and fluctuating loads with high efficiency.

Moreover, the failure of one service does not bring down the entire system. This means that an issue in one part of the tool won’t stop data collection or other operations.

How to use Nannostomus easy web scraping tools

Using the Nannostomus online web scraping tools is straightforward. The software will integrate seamlessly into your existing workflows, especially if you’re already utilizing AWS for your infrastructure. Here’s how it works:

- Deployment. We deploy the Nannostomus tool directly into your AWS account. This means that the tool runs within your cloud environment and you gain complete control over the data and processes.

- Writing modules. You will use C# to write custom modules. These modules are scripts that instruct our web data scraping tools on what data to collect, from where, and how to handle that data. Even if you’re relatively new to C#, Nannostomus gives access to a library of pre-written code snippets, which simplifies the process of setting up your scrapers.

- Data storage. The data collected by Nannostomus is automatically loaded into AWS storage solutions. Currently, we support Amazon Elastic File System (EFS) and Amazon Simple Storage Service (S3).

- Updates and patches. Once deployed, your Nannostomus setup will receive updates and patches automatically. They are pushed directly to your AWS account, so your scraper always has the latest features and security enhancements without any manual intervention on your side.

Use the best web scraper tool from Nannostomus

At Nannostomus, we’ve engineered a cost-effective and remarkably efficient solution capable of handling 99.9% of web scraping tasks with minimal expenditure. Whether you’re extracting large datasets from a single website or from hundreds of them, our simple web scraper tool lets you focus more on insights and less on the intricacies of data collection.

Get in touch with us today to explore how you can use the Nannostomus web scraping tool for your project.